Pourquoi les réseaux neuronaux profonds ne sont pas des boîtes noires [EN]

07/23/2019, by Renaud ALLIOUX

And why you can use it for critical applications

Consistently with any technological revolution, AI — and more particularly deep neural networks, raise questions and doubts, especially when dealing with critical applications.

Often considered as “black boxes” (if not black magic…) some industries struggle to consider these technologies.

Will I be able to certify it? How could I ensure reproducibility and explain incidents if they ever occur? Will customers or users be comfortable with putting security and safety in the hand of software that “designs itself?”.

These questions are key to increase deep learning adoptions in many fields such as defense, aerospace, autonomous vehicles or even medical technologies.

In this article, we will explain why recent advances make such technologies safe and secure, and as suitable as any other, even for critical applications.

First off, it works. It even works better than anything else!

Before even thinking about reproducibility or certification, the first questions to answer are “Is there any alternative?”, “Do I really need deep learning?”

The answers are, respectively: no and yes.

Lots of other machine learning or expert system technologies work very well for simple issues, or if you have a fixed number of solutions (for example, lots of things can be achieved thanks to NLP without deep neural networks). In fact, even within the world of machine learning, the number of applications where you specifically need to use deep neural networks is very limited.

But for these specific kinds of problems, there is nothing remotely close in term of performances.

Image recognition is a very good example.

When AlexNet architecture, which can be considered as the first implementation of a modern Deep Neural network, came out in 2011, it beat all competition by more than 15% on Imagenet (standard image recognition challenge), and most of this competition was already based on simple neural networks and machine learning algorithms.

In 2014 DeepFace blasted the competition on human face detection challenge by 27% at 97.35%, very close to the human score of 97.5%. Since then, all winning entries in open sources challenges for image recognition have been using deep learning algorithms.

From China to South America, all universities and researchers came up with the same conclusion: the only way to achieve or beat human performances on complex image recognition tasks is to use Deep Neural Networks. The same conclusion can be found for complex reinforcement learning tasks such as playing Go or Starcraft. All serious competitors are based on Deep Neural Network architectures.

Today, all competing methods, such as feature engineering, decision trees or fuzzy logic, end up with inferior performances and very low generalization capabilities. Maybe we will be able to see some changes within the next 15 to 20 year, nobody can anticipate future research, but it is very unlikely that any “conventional” algorithm will beat deep neural networks in the short term.

Tests do mean something.

The most common criticism when dealing with Deep Learning technology is the inability for humans to “understand” the process. To “ explain it” with a simple language and compare it to a human decision.

Why is this neuron activated and what does it do?

Yes, this can be hard (it has been noted that it is not simpler for any complex computer vision algorithm….) but this is why testing algorithms is important. And, especially for deep neural networks, tests mean something.

A common misconception is that the training process of a deep learning algorithm is like “storing images in a library”. The algorithm will store lots of labeled scene in training and when it is tested, if the scene is close to a scene in the library, it will be able to recognize it. This is not how deep neural networks work!

When you train an algorithm to detect planes on satellite images, the algorithm will not “store” the plane images, it will actually learn what a plane IS on these images. In other words, what are the key features in the picture that make this object a plane and that one not: a plane has wings, fuselage, a tail, engine, it can be of any color, and it is usually bigger than 10m and smaller than 80m, etc. Not only the network learns to detect these features, but it also learns which features are necessary, which are optional and how those features can be hierarchized.

So, back to testing, if your training base is well designed, and your testing base is representative in term of image quality and object corresponding to what your use cases will be, then testing PROVES your algorithm performances on a certain set of image characteristic — or “domain” in mathematical language.

The “trick” comes from the interpretation of this domain or from answering the question, “is this image in the same domain as the testing set so I can be sure I would have the same performances in testing and prediction?”.

Indeed deep learning algorithms do not use images information like humans. Noise, compression artifact or blur can trigger/un-trigger certain neuron while not having any impact on human interpretation. However, this can actually be measured. Several methods allow estimating the confidence and robustness of an algorithm with a given testing/training set (for example Deep k-Nearest Neighbors: Towards Confident, Interpretable and Robust Deep Learning). In other words, you can actually say “this image is in my validation domain or not” thus alerting the user that you can face a case not covered by the algorithm or where performances were not tested.

Deep learning can be explicable

Grief towards deep learning methodology is often about “how it decides”. We often hear the term “black box” and the fact that “even mathematicians do not grasp why and how they work”.

This misconception comes from the fact that the network “learns” and is not designed, but we actually do understand every step of the process.

From retro-propagation to convolution, every step and module of a deep neural network is understandable and explainable for an expert. However, due to the complexity of the learning process, it is tough to expose explanation in a language relevant for a client or a use case, in other words, “yes the cat on this smartphone image is detected here because of certain neuron activations which have been configured this way because of backpropagation… but what does it actually mean for me?”.

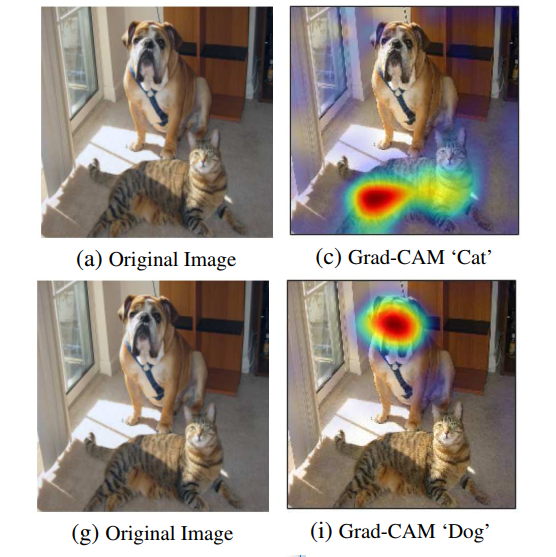

Fortunately, there are several methods to help users visualize “why” the AI decided to behave a certain way. In the case of image detection for instance, heat mat visualization (eg: Grad-CAM) allows understanding why and where the network activates on the picture and “why” it chooses to behave that way.

We see here that the “dog” classification is mainly focused on the head of the animal, meaning it chooses to classify it because of its jaws, eyes, ears…while the “cat” decision was done more thanks to the general shape, color, and posture of the animal.

Dozens of other tools and methodologies enable this kind of interpretation, for example, Bayesian neural networks can flag where the algorithm is “uncertain” of its choices.

Towards standardization

All these methods and technics allow the validation of algorithms, give confidence estimations or flag input which can create abnormal behavior. They are key to deploy efficient deep learning for critical applications and convince customers to trust the technologies.

In the end, it all goes back to trust. Deep learning is not different from any kind of new technology, it is up to engineers and data scientists to present the right tools for users to understand it and eventually trust it.

To achieve that, we strongly believe that the deep learning community should push toward standard protocols and software for testing, explicability and robustness evaluation and actively take part in the certification process for norms to be relevant to the technology.

This is why Earthcube is taking part in the working group for ISO certification of deep learning algorithms in Europe (AFNOR/CN JTC1/SC 42).