Super-résolution pour l'analyse de l'imagerie satellitaire [EN]

How image analysts and object detection algorithms can benefit from the latest advances in single-image super-resolution.

10/10/2019, by Thierry GOLDER

This is a scene everyone has seen in a detective movie or series: when the scientific police(wo)man is asked to “enhance” a poor-quality image to reveal an unsuspected detail that is key to solve the case. For decades, this made actual computer vision engineers go crazy because it was complete science fiction. Today, using the deep learning techniques that have been developed within the last 5 years, we can get one step closer to this kind of magic.

At Earthcube, many of our clients rely on satellite imagery analysis. This analysis is often performed by humans looking at images with a ground resolution of about 30cm. This means that a vehicle is a 4x8 pixels blob that can be hard to identify. It also means that the image analyst must rest their strained eyes regularly, as this task can be pretty hard on them.

Using super-resolution, we can make their work easier and faster, allowing them to analyse more images and to better identify what they are looking at. In the following example, reading the name of the boat’s company is easier on the super-resolved image. Moreover, the compression artefacts and the noise are greatly reduced.

A little bit of history on single-image super-resolution

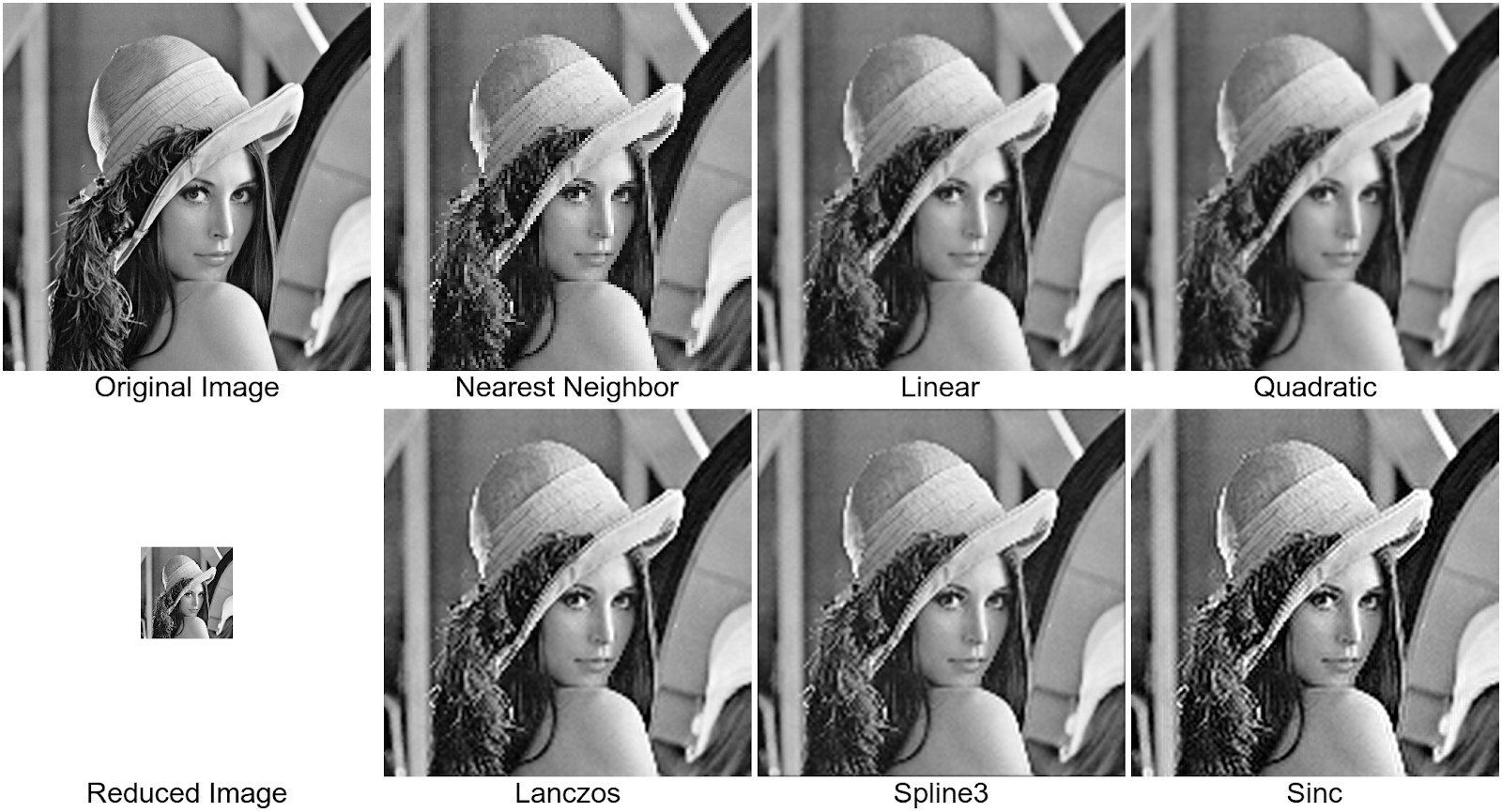

Since the early 60s, researchers have tried to increase image resolution to exceed the nominal performances of acquisition devices. This need became even stronger as computers became common products and screen definition kept increasing. Computer vision methods were called to the rescue. Interpolation algorithms were used to compute the “enhanced” image. Many techniques have been developed, each one trying to overcome its predecessors’ drawbacks: nearest neighbour, linear, quadratic, spline etc. As can be seen in the following pictures, none is perfect and it is always difficult to find the most appropriate one for a given type of image.

In all of these methods, the number of tunable parameters remains very small. And as for every computer vision task, the advent of deep learning and convolutional neural networks with thousands of parameters was a complete game-changer. The use of these networks allows for reaching performances that were previously impossible. Super-resolution from a single image is among the tasks that benefit from this technological leap.

Gains from super-resolving satellite images

At Earthcube, one of our main tasks is detecting objects like cars in satellite or aerial images. As said before, with a 30cm resolution image, a car is 4 pixels wide and 8 pixels long. Such a small object can be easily confused with a small garage or a dumpster. Only sharp outlines allow a human eye to distinguish real vehicles from other objects. The good thing is, this is exactly was super-resolution is made for!

We tried various super-resolution ratios. To our surprise, multiplying by 4 or 8 the size of an image is much more useful than multiplying by 2. Our understanding is that when upscaling by a high factor, the network has more degrees of freedom to learn how to reconstruct details. The two examples below are using an upscaling factor of 4 between the original image and the super-resolved one.

In the case of this plane, an image analyst using the original image classified it as a Y8, without any information of its nationality. However, when using the super-resolved image, it was possible to detect the drifts at the tips of the elevators and the rostrum behind the tail. Moreover, the Chinese ideograms on the side of the plane became more visible. This allowed classifying the plan as a Y9, a Chinese plane.

In this situation, the super-resolved image allows to better distinguish the individual pipelines that are close and can pass under each other. This may make following a given pipeline much straightforward. Moreover, the image gets less noisy and the compression artefacts are greatly reduced, making the work of the image analyst easier.

Technical choices

Today, two types of neural networks are used for single-image super-resolution: Generative Adversarial Networks (GANs) and architectures based on Residual Networks (ResNets).

At Earthcube, we chose to develop a super-resolution algorithm by using ResNets. As these networks use residual information (the difference between the output and the input of a layer) to learn how to add details to the original image, we find that the risk of the network “inventing” information not present in the original image is much lower than with GANs which reconstruct the whole image.

As a first step, we selected a training dataset composed of photos extracted from DIV2K, the traditional super-resolution challenge dataset. Interestingly, this training dataset did not contain any remote sensing images, which was a good way of seeing if our network would generalise well when we would apply it to our satellite images. We dedicated a lot of attention to the way we generated the low-resolution images from the high-resolution ones, as it is known to have a strong impact on the network’s performances.

We trained various networks, mainly based on the VDSR architecture, for different upscaling factors: 2, 4, 6 and 8. We found that it was generally better to have the highest possible ratio, in terms of the quality of the image produced. However, the higher the upscaling ratio, the bigger the super-resolved image gets. With that in mind, we found that a ratio of 4 was a good compromise: good quality with a reasonably-sized output image.

We chose to train the network using Adam optimizer, which works well for most of our use-cases. As for the loss, we used a simple L1 loss calculated between the upscaled image and the real high-resolution image. Obviously, there is room for improvements on this aspect and we are actively working on more sophisticated losses to guide the network to a better operating point. However, the L1 loss was surprisingly efficient to get very good results.

One tool, many applications

At Earthcube, such a super-resolution network can be used in many aspects of our processes. The first thing we did was to use it to make the job of people labelling images easier. As they spend hours segmenting vehicles or classifying aircrafts, increasing the quality of the images they are looking at means speeding their work, increasing the quality of their classification and boosting their well-being. This is something that many of our clients could also benefit from.

But if this enhancement can be useful for humans, it can also benefit machines! The first test we have performed shows that our detection algorithms work better when we use super-resolution as a pre-processing, especially when the images have a resolution a bit higher than 50cm, either due to instrument limitations or to a high viewing angle. The example below shows that the effect can be very important in difficult cases.

Conclusion

We are making many other tests to measure how much we can increase the performances of our solutions using super-resolution, including also image segmentation and classification. But it is already clear that this is a powerful tool to have and that the number of applications is huge, both at Earthcube and for our clients.

In satellite imagery, where technology is touching the limits of what physics allows, using software to go beyond what is possible today is a dream come true.